Showing

- contributions/ds_infn_cc/infncc-net.png 0 additions, 0 deletionscontributions/ds_infn_cc/infncc-net.png

- contributions/ds_infn_cc/infncc-services.png 0 additions, 0 deletionscontributions/ds_infn_cc/infncc-services.png

- contributions/experiment/experiment.pdf 0 additions, 0 deletionscontributions/experiment/experiment.pdf

- contributions/farming/ARFarming2018.tex 78 additions, 50 deletionscontributions/farming/ARFarming2018.tex

- contributions/fermi/fermi.tex 4 additions, 4 deletionscontributions/fermi/fermi.tex

- contributions/gamma/gamma.tex 12 additions, 3 deletionscontributions/gamma/gamma.tex

- contributions/icarus/report_2018.tex 8 additions, 8 deletionscontributions/icarus/report_2018.tex

- contributions/juno/.gitkeep 0 additions, 0 deletionscontributions/juno/.gitkeep

- contributions/juno/juno-annual-report-2019.pdf 0 additions, 0 deletionscontributions/juno/juno-annual-report-2019.pdf

- contributions/km3net/km3net.tex 17 additions, 18 deletionscontributions/km3net/km3net.tex

- contributions/lhcb/lhcb.tex 29 additions, 32 deletionscontributions/lhcb/lhcb.tex

- contributions/lhcf/lhcf.tex 1 addition, 1 deletioncontributions/lhcf/lhcf.tex

- contributions/limadou/limadou.tex 3 additions, 3 deletionscontributions/limadou/limadou.tex

- contributions/na62/main.tex 2 additions, 4 deletionscontributions/na62/main.tex

- contributions/net/main.tex 47 additions, 23 deletionscontributions/net/main.tex

- contributions/newchim/repnewchim18.tex 10 additions, 10 deletionscontributions/newchim/repnewchim18.tex

- contributions/padme/2019_PADMEcontribution.pdf 0 additions, 0 deletionscontributions/padme/2019_PADMEcontribution.pdf

- contributions/pett/.gitkeep 0 additions, 0 deletionscontributions/pett/.gitkeep

- contributions/pett/bibliopett.bib 37 additions, 0 deletionscontributions/pett/bibliopett.bib

- contributions/pett/pett.tex 163 additions, 0 deletionscontributions/pett/pett.tex

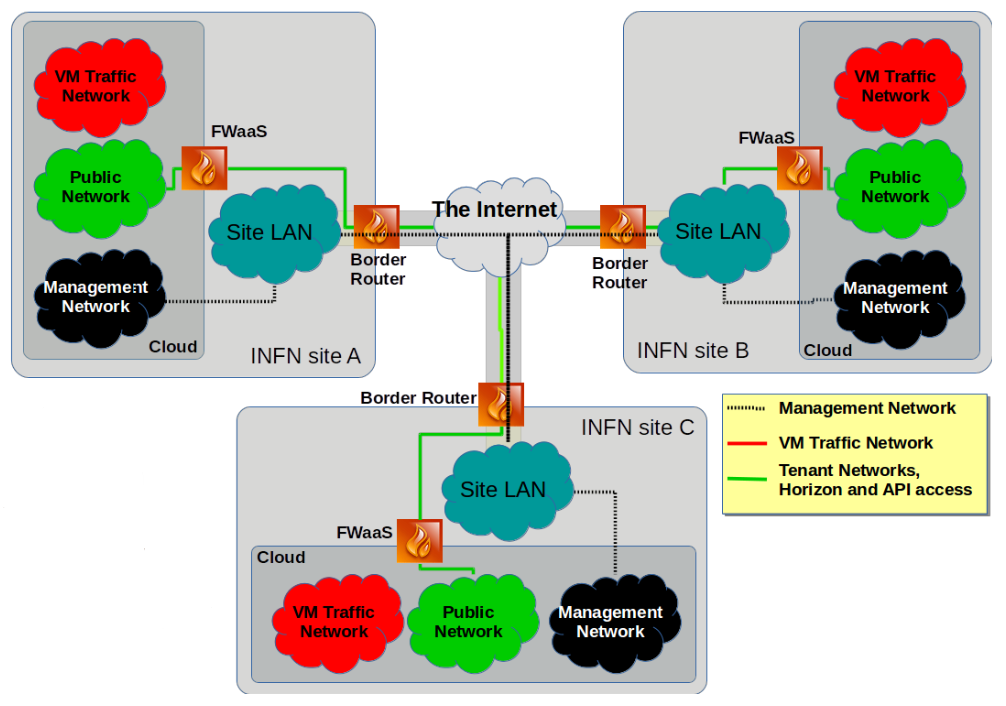

contributions/ds_infn_cc/infncc-net.png

0 → 100644

198 KiB

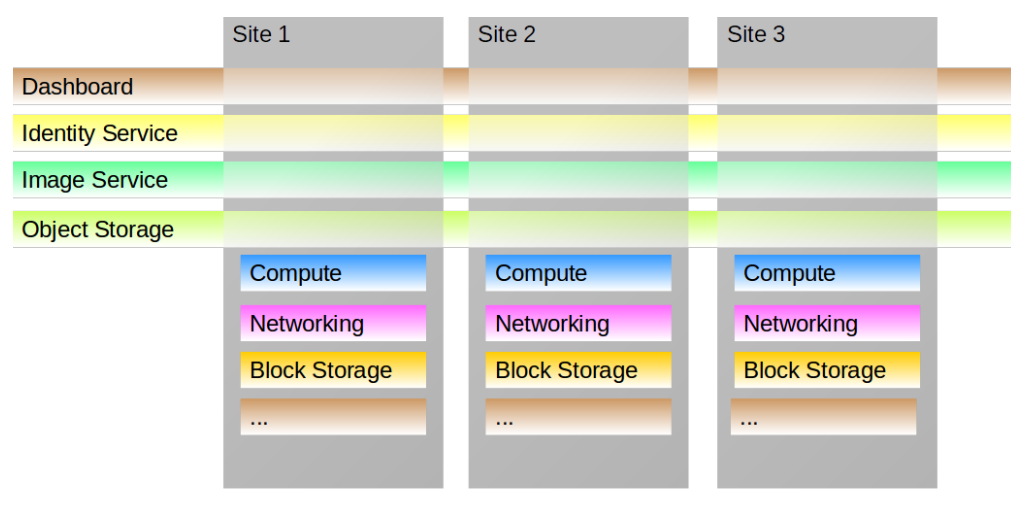

contributions/ds_infn_cc/infncc-services.png

0 → 100644

128 KiB

contributions/experiment/experiment.pdf

0 → 100644

File added

contributions/juno/.gitkeep

0 → 100644

File added

No preview for this file type

contributions/pett/.gitkeep

0 → 100644

contributions/pett/bibliopett.bib

0 → 100644

contributions/pett/pett.tex

0 → 100644