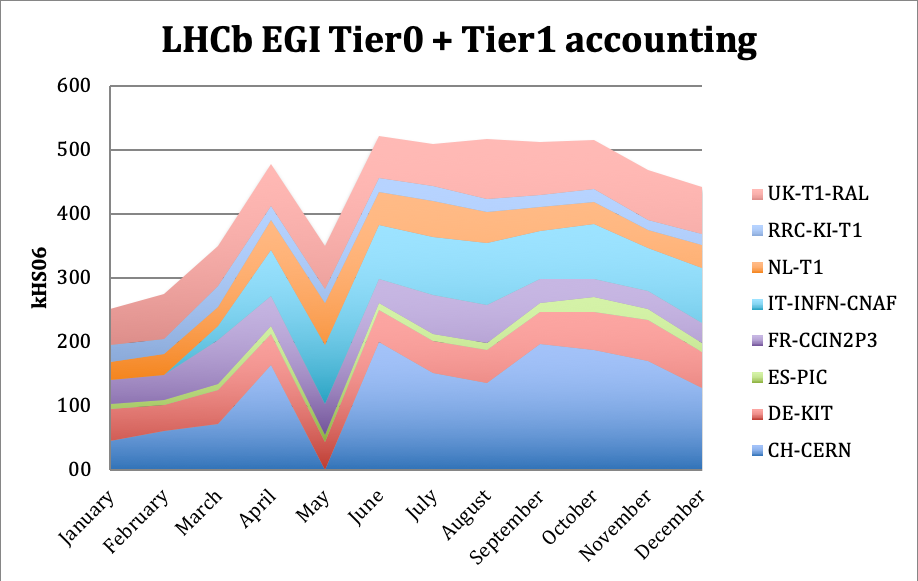

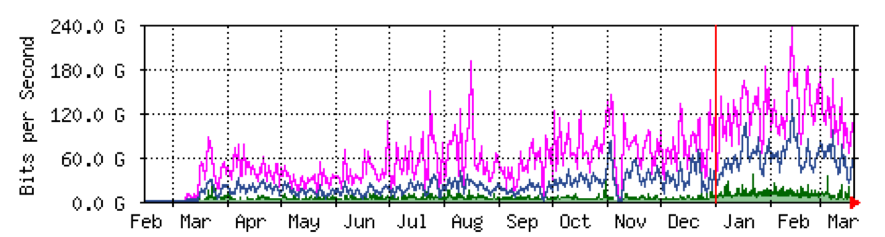

- contributions/lhcb/T0T1.png (0 additions, 0 deletions)

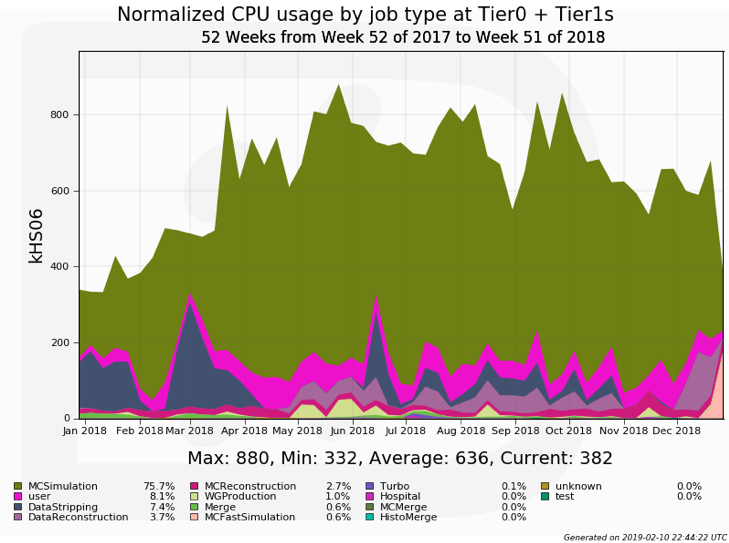

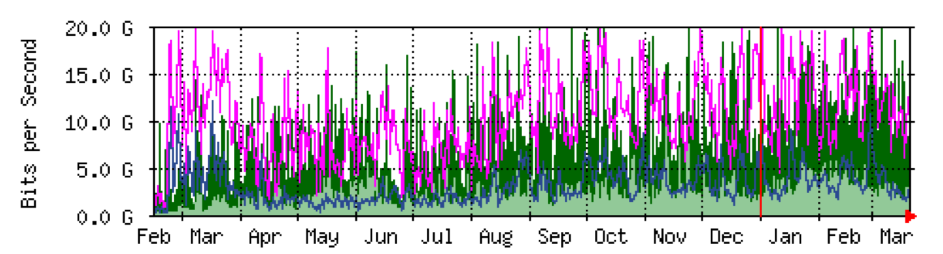

- contributions/lhcb/T0T1_MC.png (0 additions, 0 deletions)

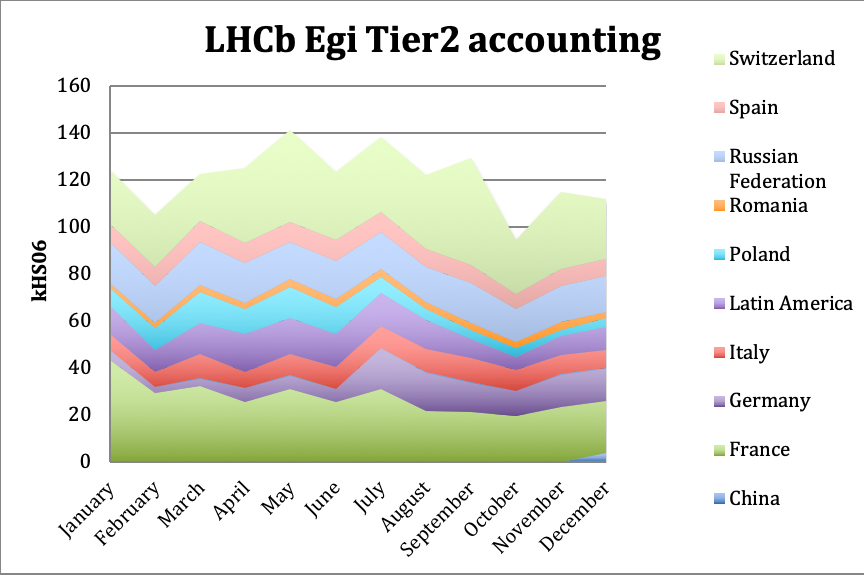

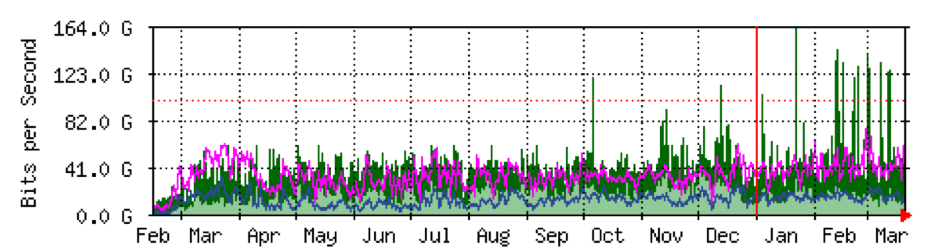

- contributions/lhcb/T2.png (0 additions, 0 deletions)

- contributions/lhcb/lhcb.tex (237 additions, 0 deletions)

- contributions/lhcf/.gitkeep (0 additions, 0 deletions)

- contributions/lhcf/lhcf.tex (53 additions, 0 deletions)

- contributions/limadou/limadou.tex (3 additions, 3 deletions)

- contributions/na62/.gitkeep (0 additions, 0 deletions)

- contributions/na62/main.tex (65 additions, 0 deletions)

- contributions/net/.gitkeep (0 additions, 0 deletions)

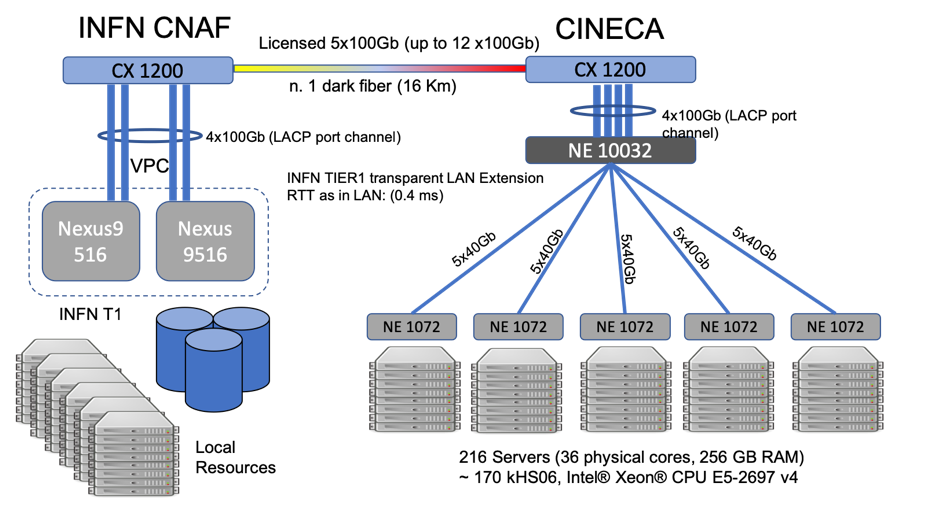

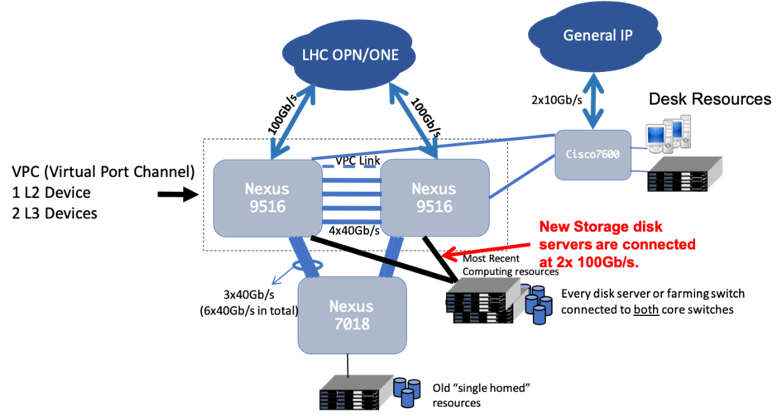

- contributions/net/cineca-schema.png (0 additions, 0 deletions)

- contributions/net/cineca.png (0 additions, 0 deletions)

- contributions/net/connection-schema.png (0 additions, 0 deletions)

- contributions/net/gpn.png (0 additions, 0 deletions)

- contributions/net/lhcone-opn.png (0 additions, 0 deletions)

- contributions/net/main.tex (173 additions, 0 deletions)

- contributions/net/net-board.png (0 additions, 0 deletions)

- contributions/newchim/repnewchim18.tex (10 additions, 10 deletions)

- contributions/padme/.gitkeep (0 additions, 0 deletions)

- contributions/padme/2019_PADMEcontribution.pdf (0 additions, 0 deletions)

contributions/lhcb/T0T1.png

0 → 100644

178 KiB

contributions/lhcb/T0T1_MC.png

0 → 100644

146 KiB

contributions/lhcb/T2.png

0 → 100644

159 KiB

contributions/lhcb/lhcb.tex

0 → 100644

contributions/lhcf/.gitkeep

0 → 100644

contributions/lhcf/lhcf.tex

0 → 100644

contributions/na62/.gitkeep

0 → 100644

contributions/na62/main.tex

0 → 100644

contributions/net/.gitkeep

0 → 100644

contributions/net/cineca-schema.png

0 → 100644

217 KiB

contributions/net/cineca.png

0 → 100644

71.7 KiB

contributions/net/connection-schema.png

0 → 100644

117 KiB

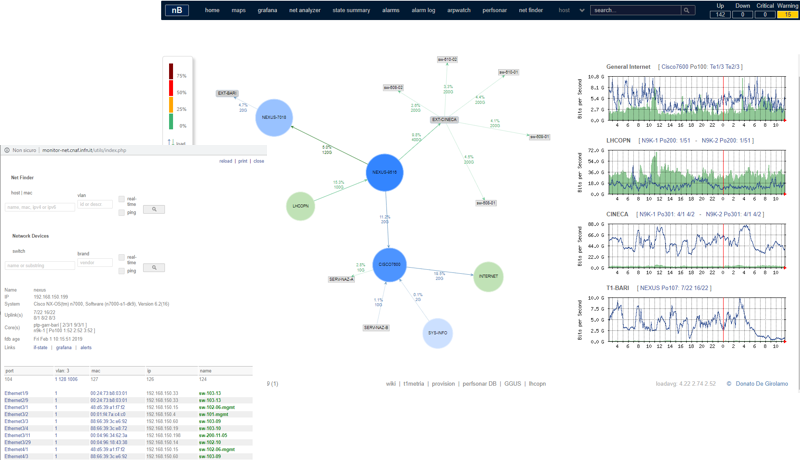

contributions/net/gpn.png

0 → 100644

95.3 KiB

contributions/net/lhcone-opn.png

0 → 100644

79.1 KiB

contributions/net/main.tex

0 → 100644

contributions/net/net-board.png

0 → 100644

170 KiB

contributions/padme/.gitkeep

0 → 100644

File added