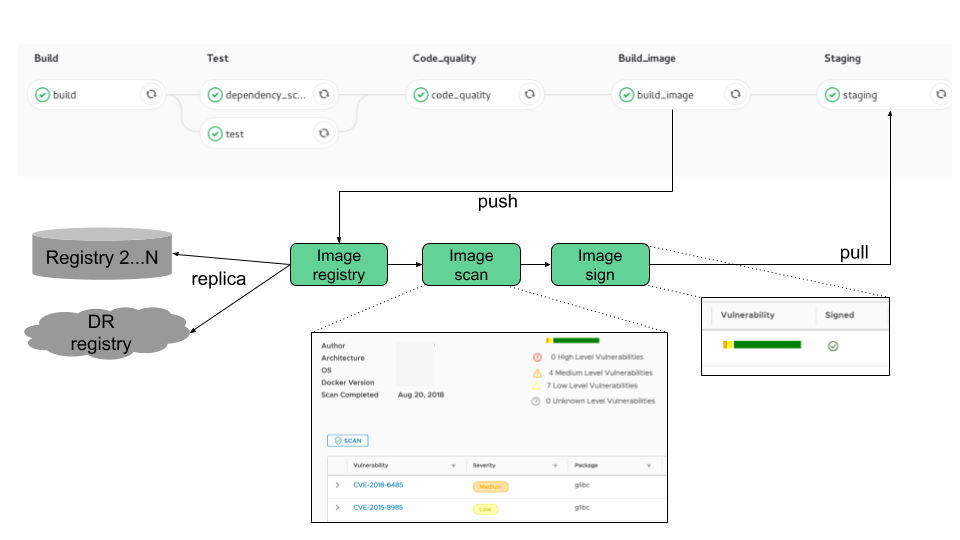

- contributions/sysinfo/container_ci.png (0 additions, 0 deletions)

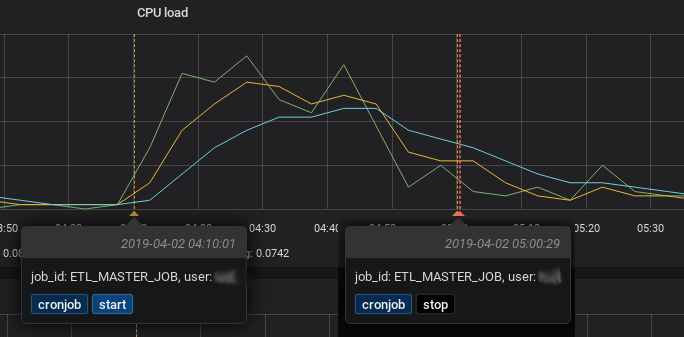

- contributions/sysinfo/cronjob_annotation.png (0 additions, 0 deletions)

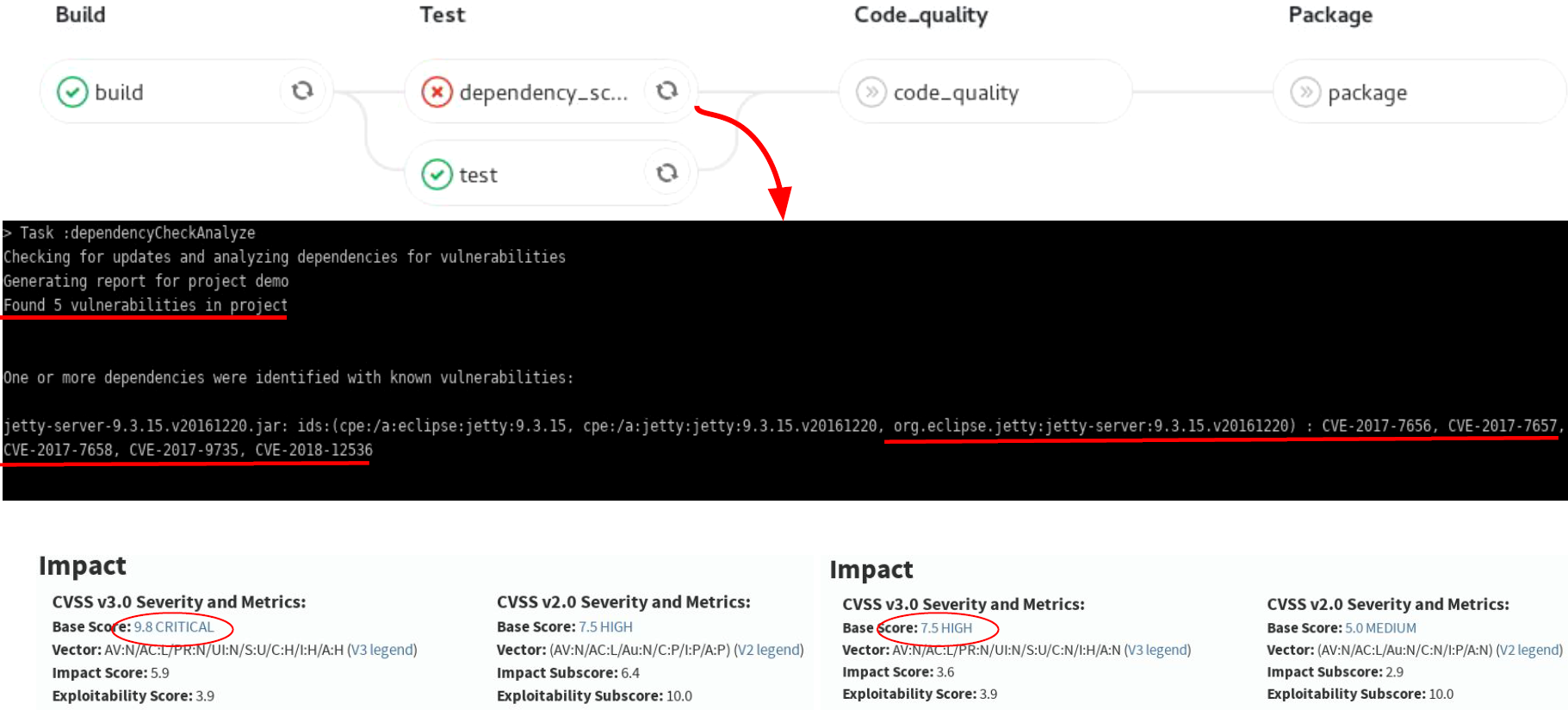

- contributions/sysinfo/deps_scan.png (0 additions, 0 deletions)

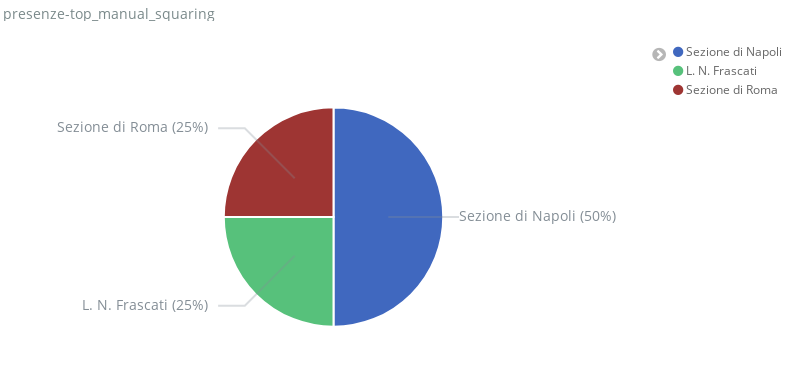

- contributions/sysinfo/presenze_kibana.png (0 additions, 0 deletions)

- contributions/sysinfo/sysinfo.tex (172 additions, 0 deletions)

- contributions/test/TEST.tex (502 additions, 0 deletions)

- contributions/test/test.eps (16 additions, 0 deletions)

- contributions/tier1/.gitkeep (0 additions, 0 deletions)

- contributions/tier1/cpu2018.png (0 additions, 0 deletions)

- contributions/tier1/disk2018.png (0 additions, 0 deletions)

- contributions/tier1/pledge.png (0 additions, 0 deletions)

- contributions/tier1/tape2018.png (0 additions, 0 deletions)

- contributions/tier1/tier1.tex (230 additions, 0 deletions)

- contributions/transfer/transfer.pdf (0 additions, 0 deletions)

- contributions/user-support/.gitkeep (0 additions, 0 deletions)

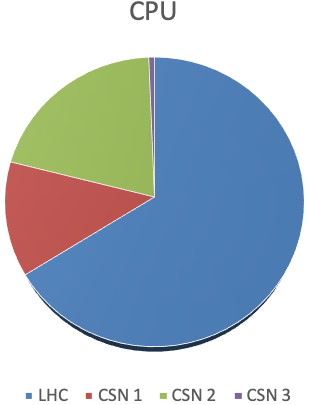

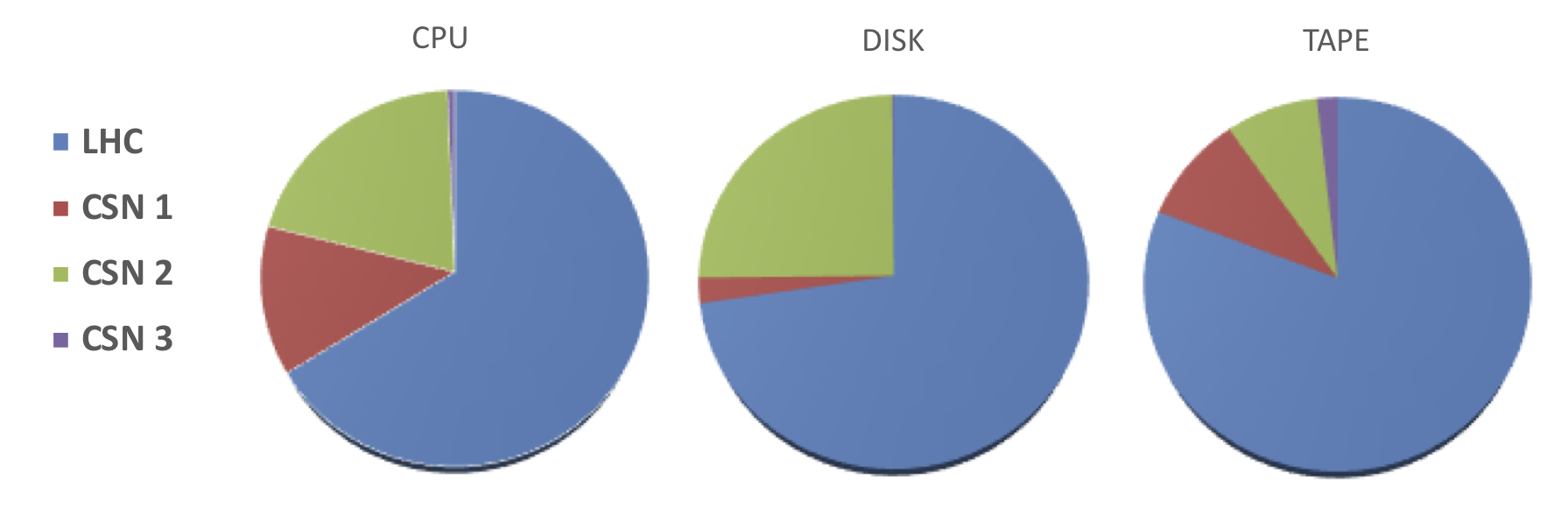

- contributions/user-support/cpu.PNG (0 additions, 0 deletions)

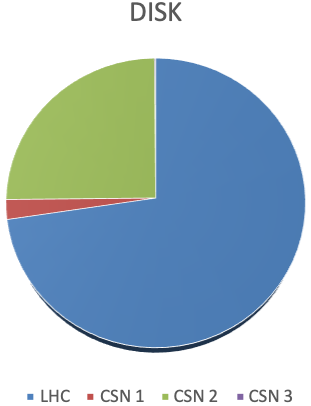

- contributions/user-support/disco.PNG (0 additions, 0 deletions)

- contributions/user-support/main.tex (79 additions, 0 deletions)

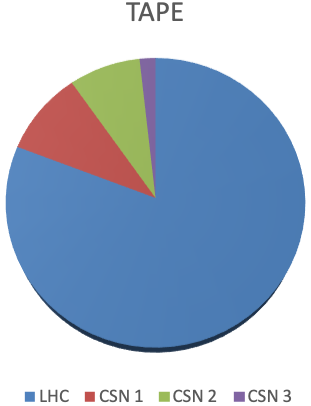

- contributions/user-support/tape.PNG (0 additions, 0 deletions)

- contributions/virgo/AdV_computing_CNAF.tex (67 additions, 0 deletions)

contributions/sysinfo/container_ci.png

0 → 100644

54 KiB

contributions/sysinfo/cronjob_annotation.png

0 → 100644

40.1 KiB

contributions/sysinfo/deps_scan.png

0 → 100644

4.3 MiB

contributions/sysinfo/presenze_kibana.png

0 → 100644

22.4 KiB

contributions/sysinfo/sysinfo.tex

0 → 100644

contributions/test/TEST.tex

0 → 100644

contributions/test/test.eps

0 → 100644

contributions/tier1/.gitkeep

0 → 100644

contributions/tier1/cpu2018.png

0 → 100644

27.8 KiB

contributions/tier1/disk2018.png

0 → 100644

28.4 KiB

contributions/tier1/pledge.png

0 → 100644

180 KiB

contributions/tier1/tape2018.png

0 → 100644

30.2 KiB

contributions/tier1/tier1.tex

0 → 100644

contributions/transfer/transfer.pdf

0 → 100644

File added

contributions/user-support/.gitkeep

0 → 100644

contributions/user-support/cpu.PNG

0 → 100644

62.7 KiB

contributions/user-support/disco.PNG

0 → 100644

30.1 KiB

contributions/user-support/main.tex

0 → 100644

contributions/user-support/tape.PNG

0 → 100644

23.4 KiB

contributions/virgo/AdV_computing_CNAF.tex

0 → 100644