sync from upstream

- build.sh: 11 additions, 11 deletions

- cnaf-annual-report-2018.tex: 11 additions, 11 deletions

- contributions/alice/.gitkeep: 0 additions, 0 deletions

- contributions/alice/main.tex: 319 additions, 0 deletions

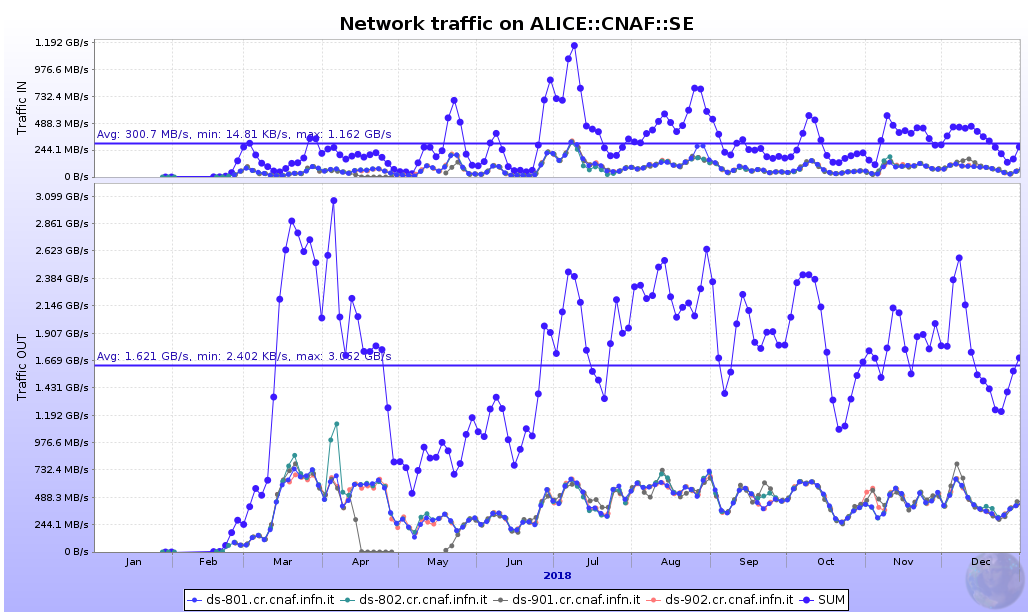

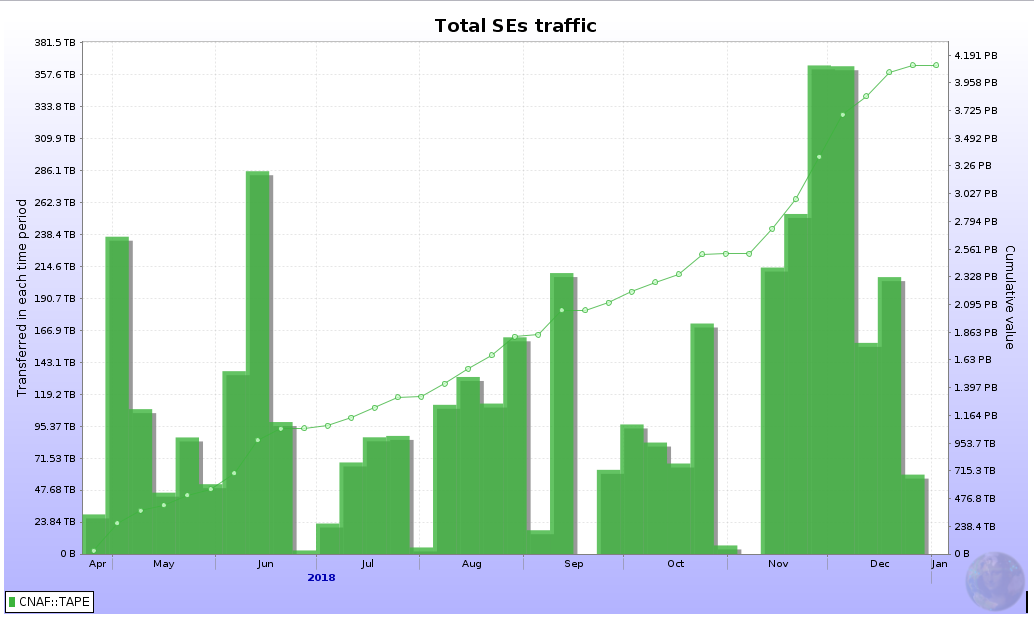

- contributions/alice/network_traffic_cnaf_se_2018.png: 0 additions, 0 deletions

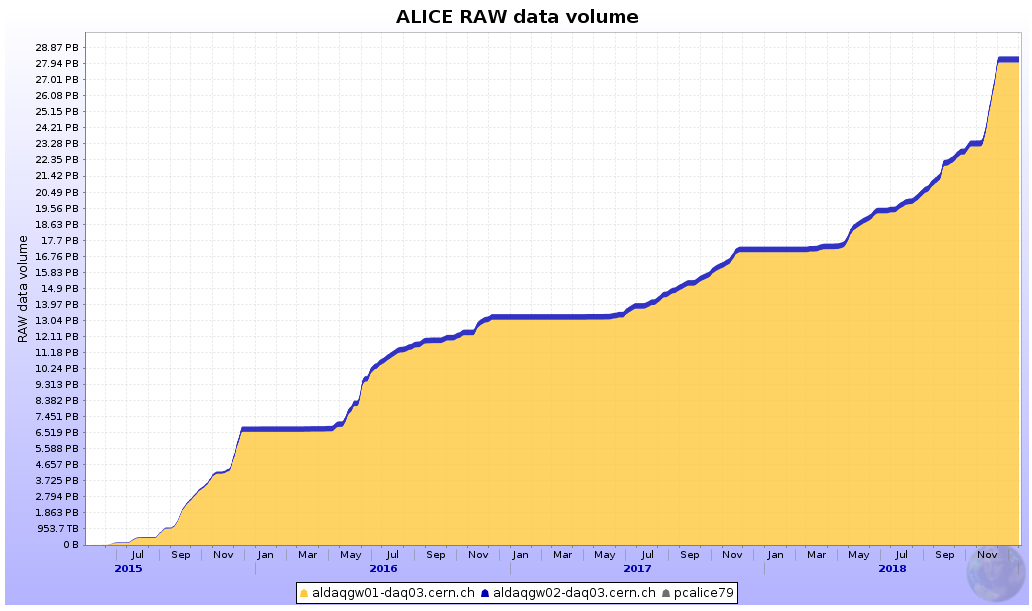

- contributions/alice/raw_data_accumulation_run2.png: 0 additions, 0 deletions

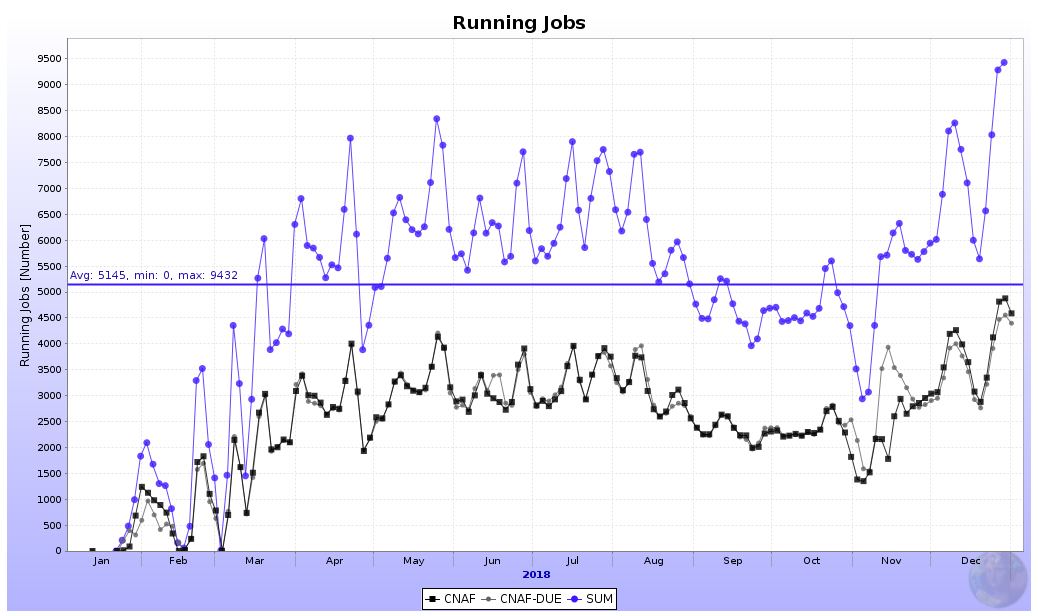

- contributions/alice/running_jobs_CNAF_2018.png: 0 additions, 0 deletions

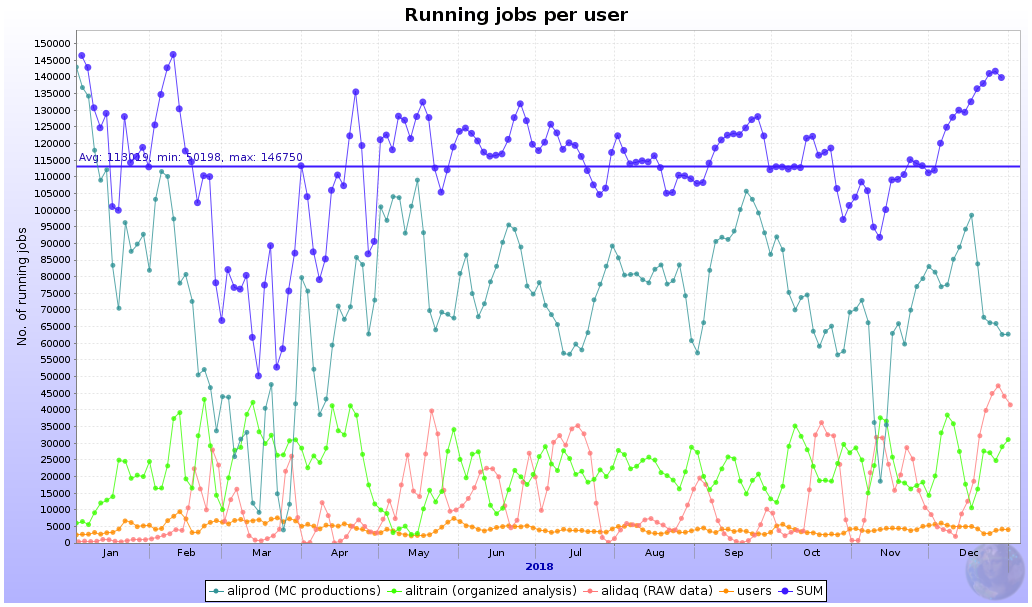

- contributions/alice/running_jobs_per_users_2018.png: 0 additions, 0 deletions

- contributions/alice/total_traffic_cnaf_tape_2018.png: 0 additions, 0 deletions

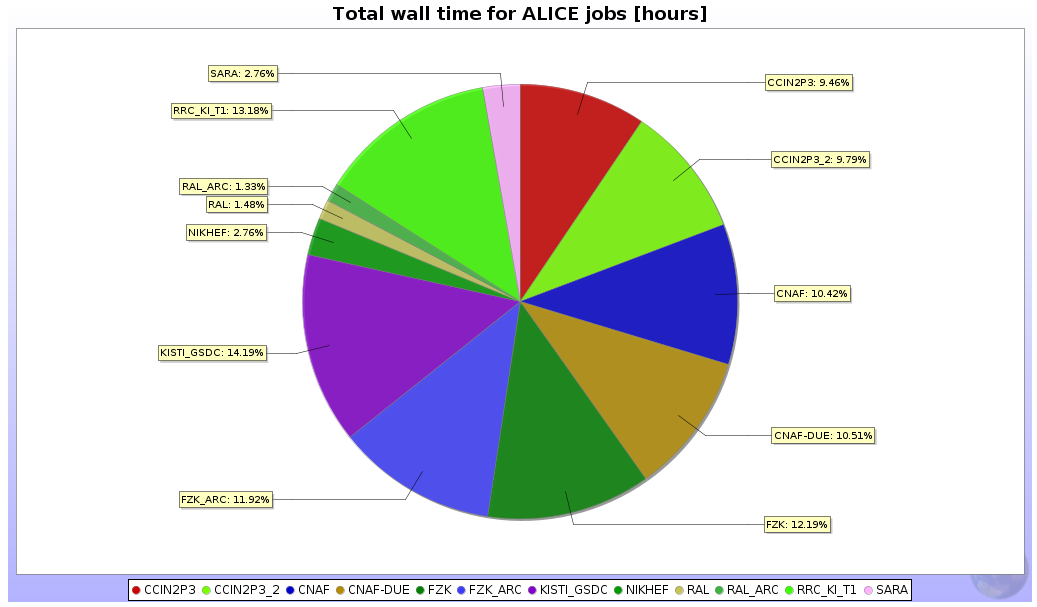

- contributions/alice/wall_time_tier1_2018.png: 0 additions, 0 deletions

- contributions/atlas/atlas.tex: 108 additions, 0 deletions

- contributions/audit/Audit-2018.tex: 74 additions, 0 deletions

- contributions/cta/CTA_ProjectTimeline_Nov2018.eps: 18967 additions, 0 deletions

- contributions/cta/CTA_annualreport_2018_v1.tex: 146 additions, 0 deletions

- contributions/cta/cpu-days-used-2018-bysite.eps: 4115 additions, 0 deletions

- contributions/cta/normalized-cpu-used-2018-bysite-cumulative.eps: 4878 additions, 0 deletions

- contributions/cta/transfered-data-2018-bysite.eps: 5165 additions, 0 deletions

- contributions/cupid/.gitkeep: 0 additions, 0 deletions

- contributions/cupid/cupid-biblio.bib: 114 additions, 0 deletions

- contributions/cupid/main.tex: 60 additions, 0 deletions

contributions/alice/.gitkeep

0 → 100644

contributions/alice/main.tex

0 → 100644

146 KiB

66.2 KiB

122 KiB

182 KiB

65.1 KiB

contributions/alice/wall_time_tier1_2018.png

0 → 100644

70.5 KiB

contributions/atlas/atlas.tex

0 → 100644

contributions/audit/Audit-2018.tex

0 → 100644

contributions/cupid/.gitkeep

0 → 100644

contributions/cupid/cupid-biblio.bib

0 → 100644

contributions/cupid/main.tex

0 → 100644